2D-3D Imagery Fusion and Reconstruction

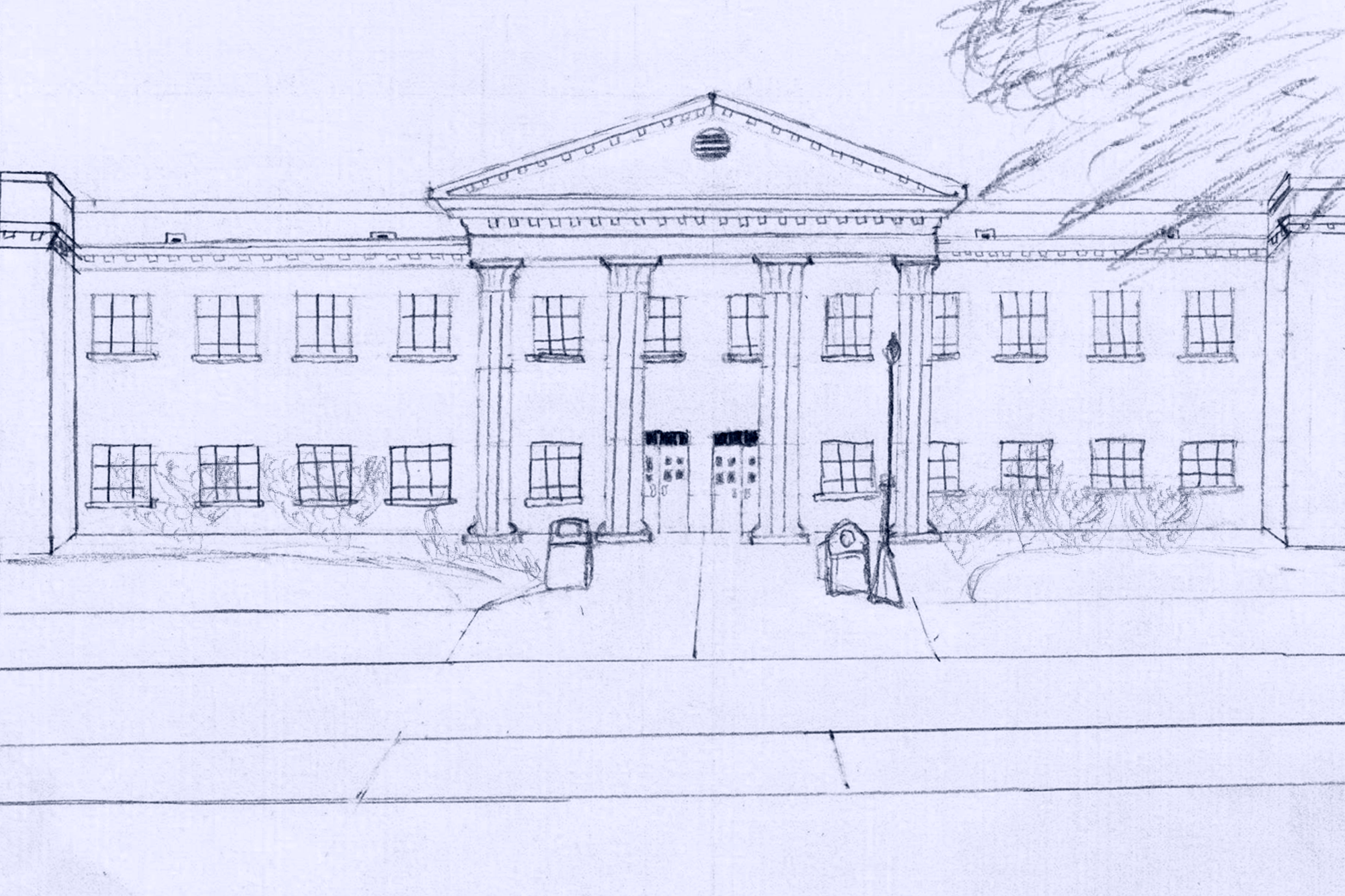

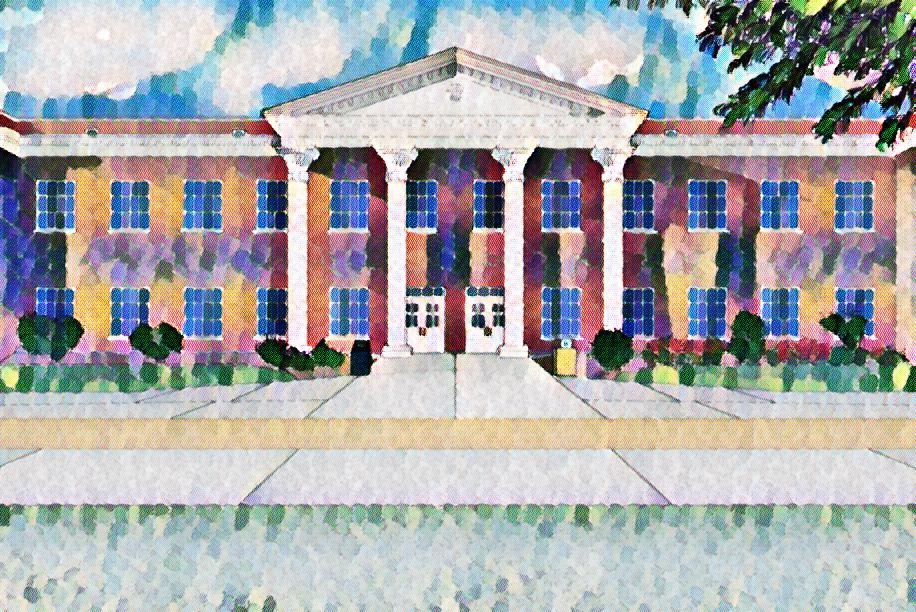

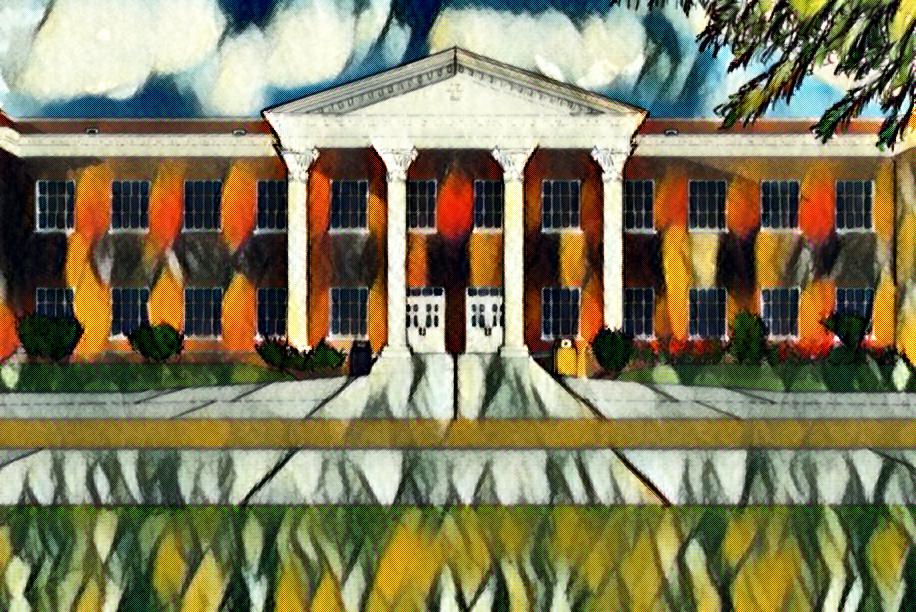

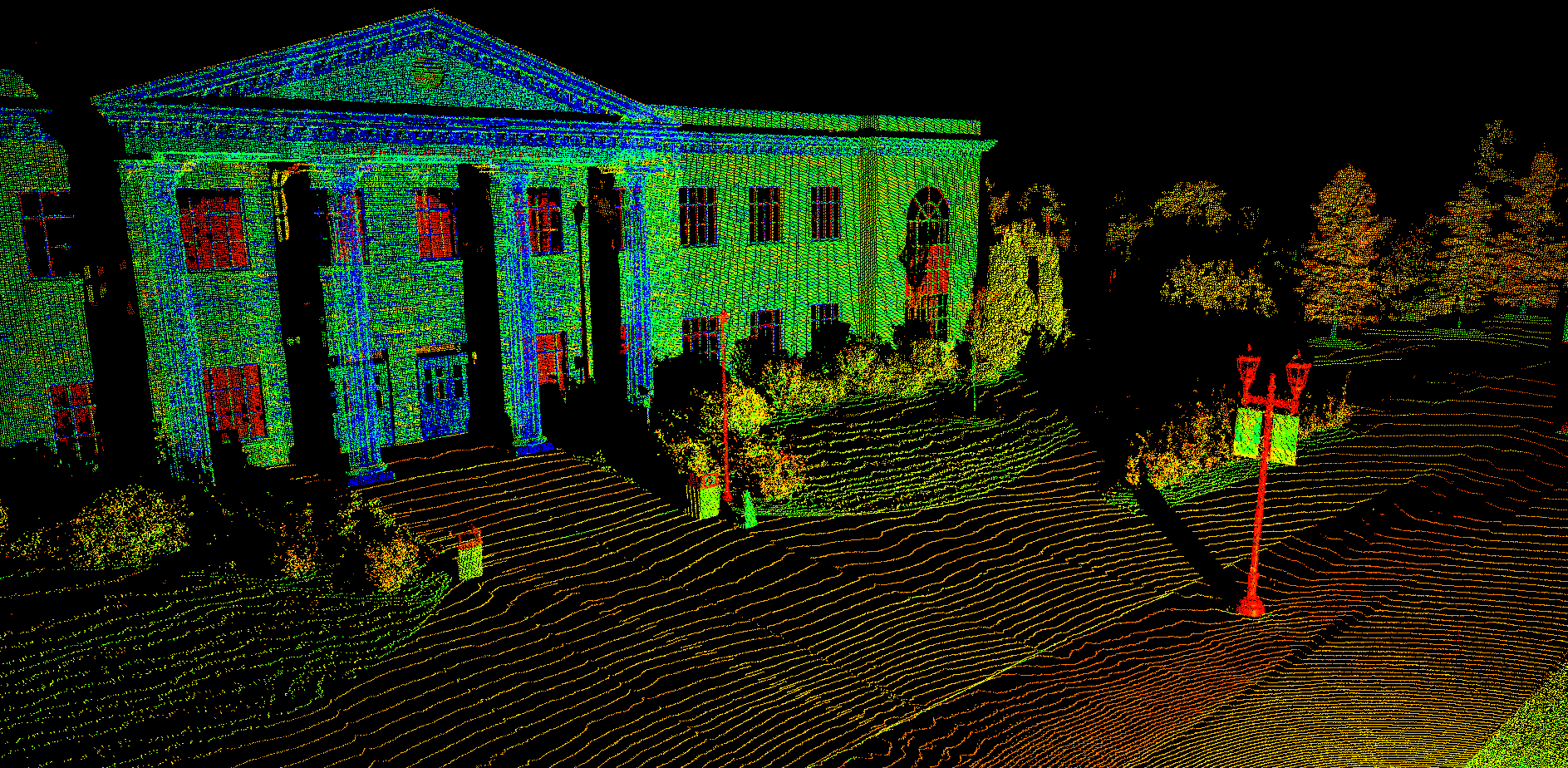

We are building a framework for registering 2D visual documentation with 3D LIDAR (Light Detection and Ranging) scans. The project's goal is to align complementary but dissimilar image sources to create 3D reconstructions. These virtual models help users visualize 2D datasets and better understand the environments they depict. Our research group is studying the commonalities among photographs, videos, historical documents, architectural plans, artwork, and LIDAR scans to identify and quantify features that can be used for matching. Although the human visual system can easily recognize the same building in, for example, a painting, a video, and a 3D scan, these modalities are stored very differently at the pixel level, which makes automatic matching a challenging task. The ability to align different data types, and in turn create 3D reconstructions, can aid scene understanding by bringing depth and perspective to otherwise flat imagery.

The ultimate goal is to take 3D scans and photos of an area (a historical building, state park, university campus, etc.) and create a 3D digital model that users can view and interact with from anywhere. This will enable designers, engineers, and researchers to obtain real-world measurements digitally when collecting them in person would be cost-prohibitive, time-prohibitive, or dangerous. Making models and image sets like these widely available will also let people see and learn more about their own community and other regions when visiting in person is not feasible.

Students: Trevor Tackett, Veronica Meyes, Steven Allen